Artifacts that Understand English

Part of designing for how minds work means engineering smarts into machines. This lowers the cognitive load on humans by giving the artifact itself the ability to perform basic mental tasks. For example, complex office copiers have simple on-board smarts to help us troubleshoot paper jams, bankers using expert underwriting systems give loan decisions in minutes, and video games can read our frustration level and dynamically adjust the difficulty of play.

Today’s machines are getting pretty good at perception, decision making, natural language processing, autonomous navigation in rough terrain, planning and a wide range of other activities that involve what we normally call intelligence. We are sneaking up on AI.

So cognitive designers should watch for any new smart functionality that can be embedded in the artifacts they design. Along these lines, Cognition Technologies recently made an important announcement.

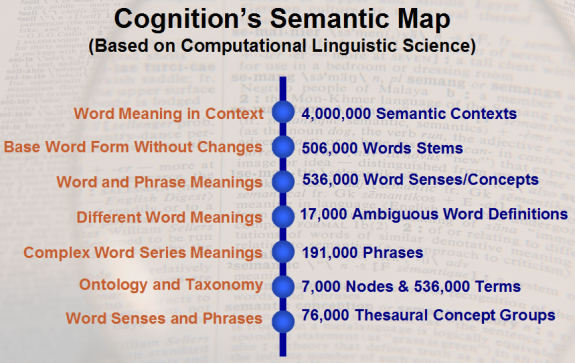

“We have taught the computer virtually all the meanings of words and phrases in the English language,” Cognition chief executive Scott Jarus told AFP. “This is clearly a building block for Web 3.0, or what is known as the Semantic Web. It has taken 30 years; it is a labour of love,” Jarus said.

They call the product Semantic Map, and you can try it out on their website to search three collections: Medline (health), a giant database of legal decisions, and Wikipedia. An overview of how to try it out can be found in this video.